How does it feel to be diagnosed with Alzheimer's?

We Are Alfred, a VR experience for the Oculus Rift, sought to help its users understand this feeling. As an interaction design consultant, I helped improve the immersion in one of Alfred's pivotal scenes.

Balancing Immersion and Interactivity

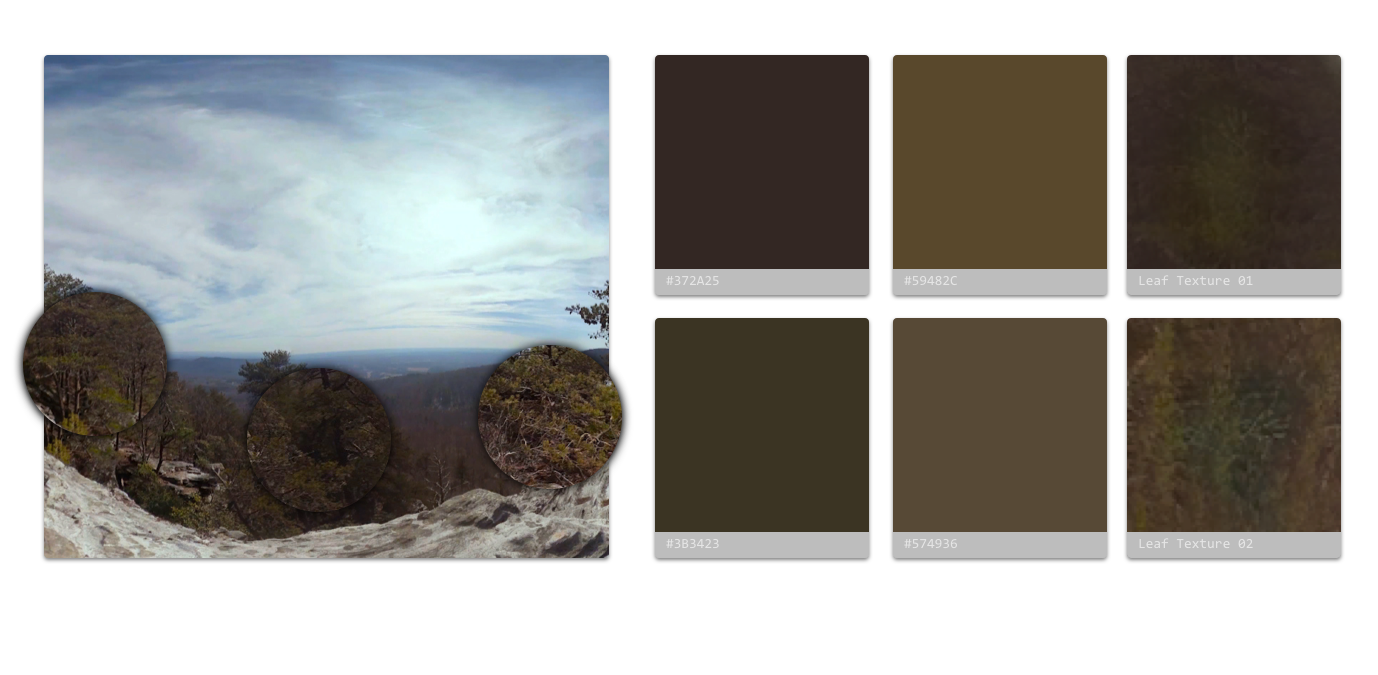

The Embodied Labs team needed to strike a balance between engaging the user through interactive elements of the narrative and immersing them in the beautifully shot 360° scenes. Their early prototypes provided user interaction, but the 3D models didn't blend well with the 360° video, and user testing showed that this broke the viewer's suspension of disbelief. This was especially true in the Dream scene, shown here.

Narrative Task Model

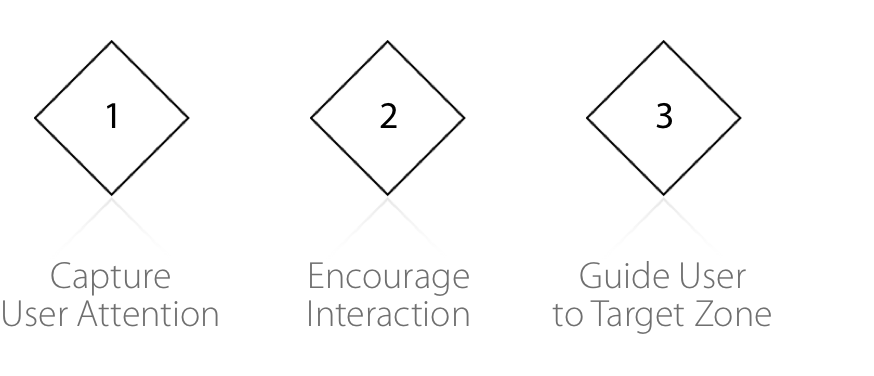

The Dream scene also held special importance in the VR film's narrative, which constrained the possible interactions. Whatever the interaction was, it had to move the user's hand toward a target zone to simulate knocking over a wine glass, a key mechanic for the following scene.

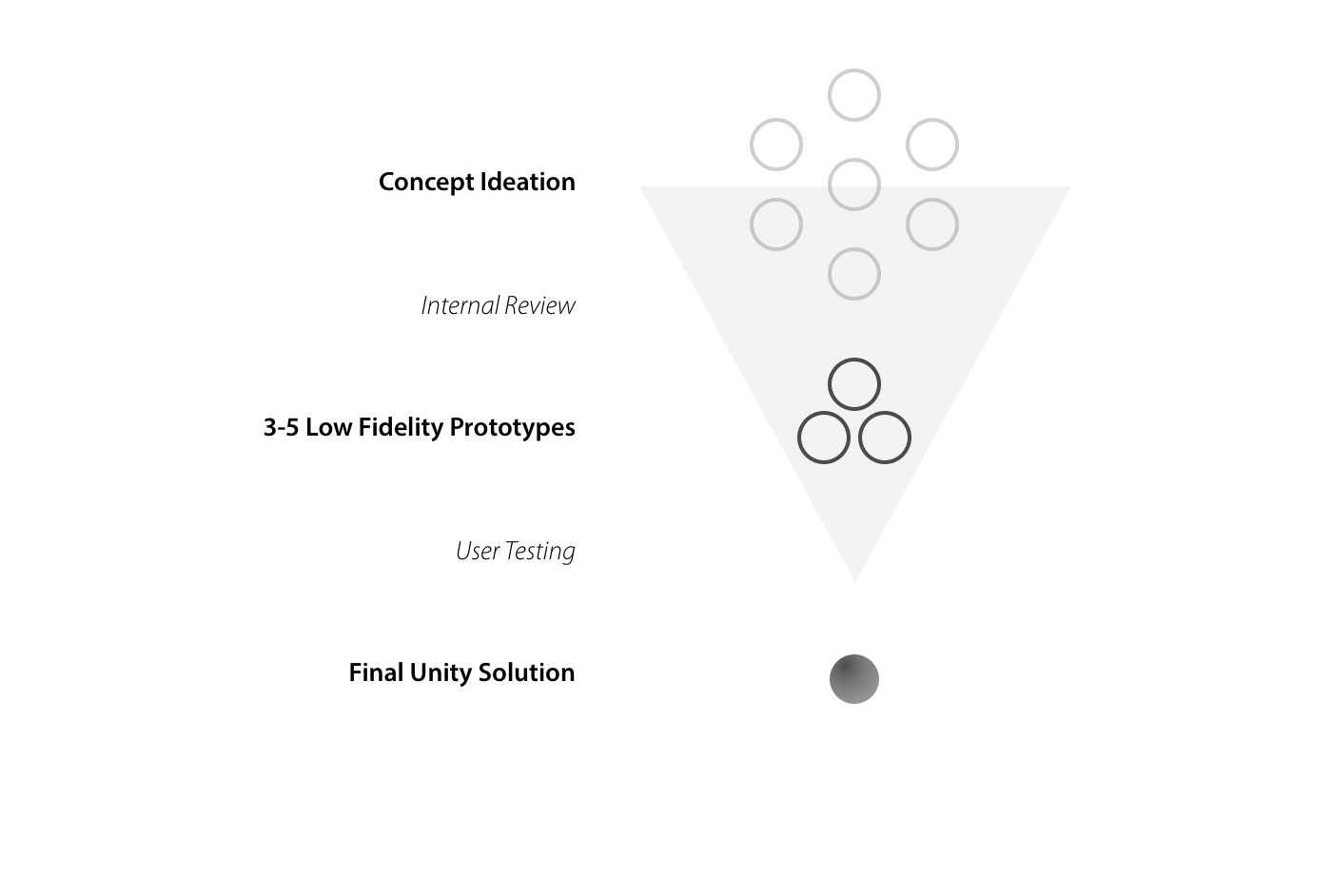

Rapid Ideation & Prototyping

I felt it was important to explore multiple ideas at low fidelity before devoting development time to a single VR solution. I therefore led an iterative process that began with paper sketches before moving to final concept development in Unity.

Prototyping

Working across Unity and Maya, I generated four concepts that best fit the requirements of the scene: each had to blend into the video background, make sense within the narrative, and focus on hand-object interactions.

After a brief round of testing with the Embodied Labs team, the "leaves" prototype was selected because it maintained the viewer's sense of immersion while still providing the interactivity the scene needed.

Asset Modeling

I used Maya to model the final leaf geometry.

Textures

When constructing the materials for the leaf, I sampled frames from the video to better blend the mesh with the surroundings, creating the initial illusion that the leaves were part of the video.
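As a rough illustration of the frame-sampling idea, the sketch below averages the color of one region across several video frames, which could serve as a starting tint for a material. It is not the workflow used on the project, which was done in Maya and Unity. The function name `average_color`, the nested-list frame representation, and the region format are all assumptions made for the example.

```python
from typing import List, Sequence, Tuple

Color = Tuple[int, int, int]
Frame = List[List[Color]]  # frame[row][col] -> (r, g, b); a hypothetical in-memory format


def average_color(frames: Sequence[Frame], region: Tuple[int, int, int, int]) -> Color:
    """Return the mean RGB color of one rectangular region across all frames.

    region is (top, left, bottom, right) in pixel indices, with bottom and
    right exclusive, matching Python slice semantics.
    """
    top, left, bottom, right = region
    totals = [0, 0, 0]
    count = 0
    for frame in frames:
        for row in frame[top:bottom]:
            for r, g, b in row[left:right]:
                totals[0] += r
                totals[1] += g
                totals[2] += b
                count += 1
    if count == 0:
        raise ValueError("region contains no pixels")
    return (round(totals[0] / count), round(totals[1] / count), round(totals[2] / count))
```

Sampling the region of the video where the leaves appear, rather than the whole frame, keeps the tint close to the local lighting around the mesh.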

Scripting

I wrote a custom instantiation script to ensure that the path of the leaves guided the user toward the "target zone" in the bottom-right quadrant of the view.
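The general idea of steering spawned objects toward a target zone can be sketched as follows. This is a simplified 2D illustration in Python, not the original Unity script. The normalized view coordinates, the `TARGET_ZONE` bounds, the spawn position along the top edge, and the straight-line paths are all assumptions chosen for the example.

```python
import random
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float]  # normalized view coordinates, (0, 0) = top-left

# Hypothetical target zone: the bottom-right quadrant of the view,
# given as (x_min, y_min, x_max, y_max).
TARGET_ZONE = (0.5, 0.5, 1.0, 1.0)


@dataclass
class Leaf:
    start: Point
    end: Point


def in_zone(point: Point, zone=TARGET_ZONE) -> bool:
    """Check whether a point lies inside the target zone (bounds inclusive)."""
    x_min, y_min, x_max, y_max = zone
    return x_min <= point[0] <= x_max and y_min <= point[1] <= y_max


def spawn_leaf(rng: random.Random, zone=TARGET_ZONE) -> Leaf:
    """Create a leaf that starts on the top edge and ends inside the zone."""
    x_min, y_min, x_max, y_max = zone
    start = (rng.uniform(0.0, 1.0), 0.0)
    end = (rng.uniform(x_min, x_max), rng.uniform(y_min, y_max))
    return Leaf(start, end)


def position_at(leaf: Leaf, t: float) -> Point:
    """Linearly interpolate the leaf's position; t is clamped to [0, 1]."""
    t = max(0.0, min(1.0, t))
    return (
        (1.0 - t) * leaf.start[0] + t * leaf.end[0],
        (1.0 - t) * leaf.start[1] + t * leaf.end[1],
    )


if __name__ == "__main__":
    rng = random.Random(7)  # seeded so repeated runs give the same paths
    for leaf in (spawn_leaf(rng) for _ in range(3)):
        print(leaf.start, "->", leaf.end, "in zone:", in_zone(leaf.end))
```

Because every path ends inside the zone, a user who follows or reaches for any leaf is drawn toward the same area, while the randomized start points keep the motion from looking scripted.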

Deployment

I worked with Embodied Labs' lead developer to integrate the final prototype into the production build for the Oculus Rift CV1.